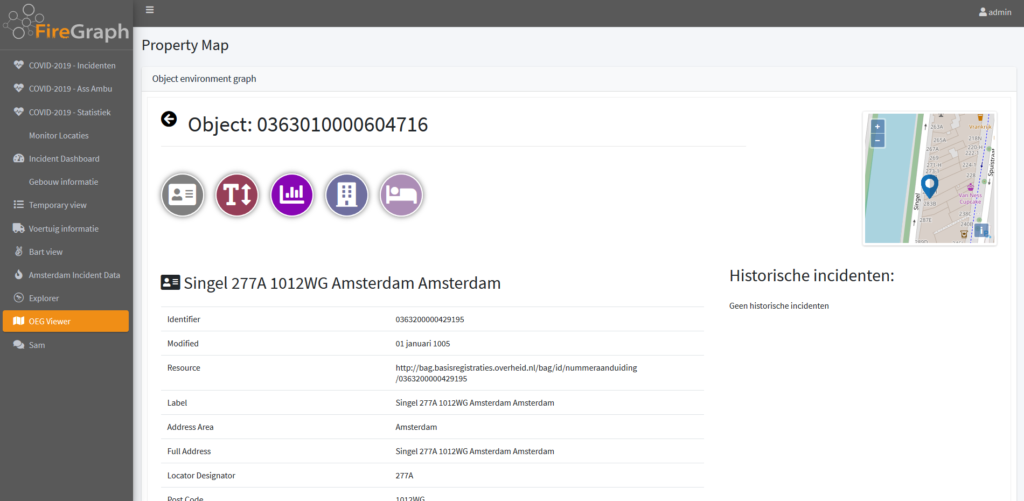

At Netage we are continuously improving FireGraph, our knowledge graph platform for fire departments. One of the components of FireGraph is the so-called “Object Environment Graph” (OEG). The OEG is built on datasets about the environment the fire department operates in and on core registries, all linked with each other. Think of cadastral registries with buildings, address data, and parcel data, but also data about monuments, schools, daycare facilities, and specific risks (e.g. LPG tanks and fireworks stores).

Use case: improve community safety by finding locations similar to those involved in recent incidents.

When a fire department evaluates an incident, some of the information in the knowledge graph might be interesting or even crucial to know. I emphasize “some of the information”: the fire department sees no benefit in using ALL the available data while finding the cause of an incident and the actions needed to prevent similar incidents in the future.

The FireGraph knowledge graph contains a wealth of information about both past incidents and the environment in which they took place. It represents a huge body of knowledge that should be put to better use.

Consider the following case:

“A fire department has responded to a residential fire. The fire originated in the bedroom of an apartment on the second floor, with a child day-care facility on the first floor”.

After the fire was extinguished, the department investigated the origins of the fire, the reaction of the people involved, the buildings involved, and how the department handled the incident. The insights gathered from this incident may be relevant for other, similar objects.

Imagine that the day-care didn’t have enough emergency exits due to the age of the building, which caused a lot of work for the units on the scene. Wouldn’t it be interesting to scan for objects with the same characteristics? For example, two-story buildings with a daycare on the ground floor, a residential dwelling on the 2nd floor, and a similar construction year. Once we find these objects, we can plan risk reduction efforts for them and prevent future incidents!

Finding similar buildings is a crucial step in using the knowledge gained from past incidents to improve the safety of the community in the future.

The technology behind this solution

We are actively developing an extension for our Object Environment Graph, which uses the following three elements:

- SHACL, a W3C Recommendation for describing the shape of a resource in the knowledge graph, which is normally used for validation.

- SPARQL, a W3C Recommendation query language for knowledge graphs.

- Stardog, an enterprise-grade graph store with built-in machine learning capabilities.

Although SHACL is intended for validation, we use it in a slightly different way. When a user has selected an object of interest, they can create a shape describing that object by selecting various properties from the OEG. These properties can come from various datasets, as long as they are all related to the OEG object.

For instance: construction year, surface size, day-care size and day-care type.

Once the properties relevant to the question are selected, a SHACL shape is created. This shape is used to construct a SPARQL query that selects all resources in our knowledge graph that have these properties. Mind you, at this point we only have all resources that have these properties; the actual values of the properties are not taken into account yet.
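To make the step from selected properties to a query more concrete, here is a minimal sketch in Python. It does not parse SHACL: it assumes the shape has already been flattened into a list of two-step property paths, and the names `PropertyPath` and `build_select` are hypothetical helpers, not part of FireGraph or Stardog. Prefix declarations would still have to be prepended to the generated query.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PropertyPath:
    """One selected property (hypothetical, simplified stand-in for a SHACL property shape)."""
    link: str       # predicate from the OEG object to a related node, e.g. "bot:hasBuilding"
    predicate: str  # predicate on that related node, e.g. "bag:status"
    var: str        # SPARQL variable name for the value, without the "?"


def build_select(paths, graph_iri):
    """Build a SELECT that matches every object having all the given properties."""
    node_vars = {}  # link predicate -> variable name of the related node
    lines = []
    for p in paths:
        if p.link not in node_vars:
            # First time we see this link: bind the related node once.
            node_vars[p.link] = f"n{len(node_vars)}"
            lines.append(f"    ?object {p.link} ?{node_vars[p.link]} .")
        lines.append(f"    ?{node_vars[p.link]} {p.predicate} ?{p.var} .")
    projection = " ".join(f"?{p.var}" for p in paths)
    body = "\n".join(lines)
    return (
        f"SELECT ?object {projection}\n"
        f"WHERE {{\n  GRAPH <{graph_iri}> {{\n{body}\n  }}\n}}"
    )


# Three of the properties used in this article; the graph IRI is passed in by the caller.
paths = [
    PropertyPath("bot:hasBuilding", "bag:oorspronkelijkBouwjaar", "bouwjaar"),
    PropertyPath("bot:hasBuilding", "bag:status", "status"),
    PropertyPath("oeg:hasLRKP", "prop:kindPlaatsen", "kindplaatsen"),
]
query = build_select(paths, "//data.firegraph.store/oeg")
print(query)
```

Note that the generated query only requires the properties to exist. It places no constraint on their values, which is exactly the situation described above.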

How do we find similar objects within this dataset?

We use the concept similarity machine learning model provided by Stardog (//www.stardog.com/blog/similarity-search). For similarity search, Stardog vectorizes the features and uses cluster pruning to group similar objects with each other. Since the concept similarity uses a supervised machine learning algorithm, we need to train it. That is where the result of the shape-based query comes into play: the results are fed into the Stardog ML feature, which creates a graph containing the model. The shape used to create the model is stored in that same graph.

```sparql
prefix spa: <tag:stardog:api:analytics:>
prefix oeg: <//vocab.firegraph.store/oeg#>
prefix : <//schema.org/>
prefix bot: <//w3id.org/bot#>
prefix prop: <//example.com/proposed#>
prefix bag: <//bag.basisregistraties.overheid.nl/def/bag#>

# Train the similarity model on every object that matches the shape
INSERT {
  graph spa:model {
    :childcare_model a spa:SimilarityModel ;
      spa:arguments (?status2 ?bag_oppvlk2 ?bouwjaar2 ?gemiddelde_hoogte2 ?bouwlagen2 ?kindplaatsen2 ?lrkpType2) ;
      spa:predict ?object .
  }
}
WHERE {
  # One row per object; each feature is aggregated into a set
  SELECT
    (spa:set(?status) as ?status2)
    (spa:set(?bag_oppvlk) as ?bag_oppvlk2)
    (spa:set(?bouwjaar) as ?bouwjaar2)
    (spa:set(?gemiddelde_hoogte) as ?gemiddelde_hoogte2)
    (spa:set(?bouwlagen) as ?bouwlagen2)
    (spa:set(?kindplaatsen) as ?kindplaatsen2)
    (spa:set(?lrkpType) as ?lrkpType2)
    ?object
  {
    GRAPH <//data.firegraph.store/oeg> {
      ?object oeg:hasAHN ?ahn ;
              oeg:hasBuildingPart ?bp ;
              oeg:hasLRKP ?hasLrkp ;
              bot:hasBuilding ?hasBuilding .
      ?bp bag:oppervlakte ?bag_oppvlk .
      ?hasBuilding bag:status ?status ;
                   bag:oorspronkelijkBouwjaar ?bouwjaar .
      ?ahn prop:gemiddeldeHoogte ?gemiddelde_hoogte ;
           prop:bouwlagen ?bouwlagen .
      ?hasLrkp prop:kindPlaatsen ?kindplaatsen ;
               prop:lrkpType ?lrkpType .
    }
  }
  GROUP BY ?object
}
```

Now that we have trained the model, we can use the object we started with as input for the model to find similar objects. We again use the shape to generate the SPARQL query needed to query the model.

```sparql
prefix spa: <tag:stardog:api:analytics:>
prefix oeg: <//vocab.firegraph.store/oeg#>
prefix : <//schema.org/>
prefix bot: <//w3id.org/bot#>
prefix prop: <//example.com/proposed#>
prefix bag: <//bag.basisregistraties.overheid.nl/def/bag#>
# Note: rdfs: is not declared here, so this relies on the namespaces stored in the database

SELECT ?similarObjectLabel ?confidence
WHERE {
  # Ask the trained model for the 15 most similar objects
  graph spa:model {
    :childcare_model spa:arguments (?status2 ?bag_oppvlk2 ?bouwjaar2 ?gemiddelde_hoogte2 ?bouwlagen2 ?kindplaatsen2 ?lrkpType2) ;
      spa:confidence ?confidence ;
      spa:parameters [ spa:limit 15 ] ;
      spa:predict ?similarObject .
  }
  { ?similarObject rdfs:label ?similarObjectLabel }
  {
    # Collect the feature values of the reference object
    SELECT
      (spa:set(?status) as ?status2)
      (spa:set(?bag_oppvlk) as ?bag_oppvlk2)
      (spa:set(?bouwjaar) as ?bouwjaar2)
      (spa:set(?gemiddelde_hoogte) as ?gemiddelde_hoogte2)
      (spa:set(?bouwlagen) as ?bouwlagen2)
      (spa:set(?kindplaatsen) as ?kindplaatsen2)
      (spa:set(?lrkpType) as ?lrkpType2)
      ?object
    {
      GRAPH <//data.firegraph.store/oeg> {
        ?object oeg:hasAHN ?ahn ;
                oeg:hasBuildingPart ?bp ;
                oeg:hasLRKP ?hasLrkp ;
                bot:hasBuilding ?hasBuilding .
        ?bp bag:oppervlakte ?bag_oppvlk .
        ?hasBuilding bag:status ?status ;
                     bag:oorspronkelijkBouwjaar ?bouwjaar .
        ?ahn prop:gemiddeldeHoogte ?gemiddelde_hoogte ;
             prop:bouwlagen ?bouwlagen .
        ?hasLrkp prop:kindPlaatsen ?kindplaatsen ;
                 prop:lrkpType ?lrkpType .
        VALUES ?object { <//rescinfo.brandweeramsterdam.nl/data/kro/vraa/object/0363010000899097> } # The object to compare with
      }
    }
    GROUP BY ?object
  }
}
ORDER BY DESC(?confidence)
```

Stardog returns a list of similar objects: day-cares with a similar construction year, surface area, day-care type, and day-care size, each complemented with a score that expresses the actual similarity.

| similarObjectLabel | confidence |
| --- | --- |
| "0363010000770790"@en | 0.4285714285714285 |
| "0363010001005607"@en | 0.2857142857142857 |
| "0384010000010321"@en | 0.2857142857142857 |
| "0363010000572927"@en | 0.2857142857142857 |
| "0363010011258950"@en | 0.2857142857142857 |
| "0363010000818562"@en | 0.2857142857142857 |
| "0363010000891696"@en | 0.2857142857142857 |
| "0363010001015026"@en | 0.2857142857142857 |
| "0363010000849339"@en | 0.2857142857142857 |
| "0363010012061069"@en | 0.2857142857142857 |
| "0363010000890302"@en | 0.2857142857142857 |
| "0363010000768963"@en | 0.2857142857142857 |
| "0363010000722086"@en | 0.2857142857142857 |
| "0363010000582462"@en | 0.2857142857142857 |
| "0363010000605335"@en | 0.2857142857142857 |
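A quick arithmetic check on these scores, sketched in Python: the two distinct values in the result list are very close to 3/7 and 2/7, and the model was given seven arguments. This is only an observation about the numbers. Reading a score as "share of matching features out of seven" is an assumption on my side, not a description of how Stardog computes the confidence.

```python
from fractions import Fraction

# The two distinct confidence values from the result list above.
scores = [0.4285714285714285, 0.2857142857142857]

# Number of arguments passed to the model in the queries above.
n_features = 7

for score in scores:
    # Closest fraction with a small denominator.
    approx = Fraction(score).limit_denominator(20)
    print(f"{score} is close to {approx} "
          f"(about {score * n_features:.2f} out of {n_features})")
```

If that reading holds, even the best match shares fewer than half of the selected features with the reference object, which is worth keeping in mind when interpreting the list.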

With this information, we can find similar objects and put preventive measures in place. It could help the fire service improve its overall service and, through outreach programs, act before incidents actually happen, because the knowledge and experience have already been gathered elsewhere in the organization.

Using the same model on other resources is pretty straightforward: if the resource complies with the shape in the model graph, the shape can be used to construct the query that runs the similarity search for that specific resource.

So while the use case here starts as an analytical question, it could be used in operations as well. Are there other incidents similar to the one we are responding to that match the shape in the model graphs, and can we get quick hints from the results?

Limitations

One of the limitations we found is that we were not able to apply the created model to a subset restricted by geometry. In this context, we would like to know which similar objects lie within a specific geographical range. For example, we would like to know which similar objects are situated in the same neighborhood as the current object, not in the whole city. We would like to limit the search area by defining a bounding geometry or an arbitrary range of several kilometers. One simple solution would be to enlarge the initial result set and filter it by geospatial location after the similarity match.
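The post-filtering workaround could look roughly like the following Python sketch. It assumes that a latitude/longitude pair in degrees is available for each object, and it uses the haversine formula as a simple distance measure. The function names, object identifiers, and coordinates are all hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def filter_by_radius(results, coords, reference, radius_km):
    """Keep (object, score) pairs that lie within radius_km of the reference object."""
    ref_lat, ref_lon = coords[reference]
    kept = []
    for obj, score in results:
        if obj not in coords:
            continue  # no known location, so it cannot be placed inside the radius
        lat, lon = coords[obj]
        if haversine_km(ref_lat, ref_lon, lat, lon) <= radius_km:
            kept.append((obj, score))
    return kept


# Hypothetical coordinates (degrees) and similarity results, already sorted by score.
coords = {
    "ref": (52.370, 4.900),
    "near": (52.380, 4.910),
    "far": (52.090, 5.120),
}
results = [("near", 0.43), ("far", 0.29)]

nearby = filter_by_radius(results, coords, "ref", radius_km=3.0)
print(nearby)
```

Because the filter runs after the similarity match, the `spa:limit` in the query would have to be raised well above the number of results actually needed, otherwise the nearby matches may already have been cut off.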

In the current version of the extension, the model needs to be created upfront: the user has to create and train a model before similar objects can be found. Ideally, the user selects an object and asks for similar objects, after which a model is created and executed based on the selected object.

Such a construction would also work for a responding unit. While the unit is being dispatched, a model could be created and executed automatically based on the object where the incident is taking place, after which the returned data could be processed to show relevant information.
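As a rough idea of what creating a model automatically could involve, the sketch below generates the training query from a list of feature variables, following the structure of the INSERT query shown earlier. The function `build_model_insert` and the model name `:incident_model` are hypothetical, and the graph pattern is passed in as ready-made SPARQL text. Prefix declarations would still need to be prepended before sending the query to Stardog.

```python
def build_model_insert(model_name, value_vars, pattern):
    """Return a SPARQL INSERT that trains a similarity model from the given pattern."""
    # Each feature variable ?x is aggregated into ?x2, as in the hand-written query.
    aggregates = "\n    ".join(f"(spa:set(?{v}) AS ?{v}2)" for v in value_vars)
    arguments = " ".join(f"?{v}2" for v in value_vars)
    return (
        "INSERT {\n"
        "  GRAPH spa:model {\n"
        f"    {model_name} a spa:SimilarityModel ;\n"
        f"      spa:arguments ({arguments}) ;\n"
        "      spa:predict ?object .\n"
        "  }\n"
        "}\n"
        "WHERE {\n"
        f"  SELECT\n    {aggregates}\n    ?object\n"
        "  {\n"
        f"{pattern}\n"
        "  }\n"
        "  GROUP BY ?object\n"
        "}"
    )


# A reduced pattern with two of the features used in this article.
pattern = (
    "    ?object bot:hasBuilding ?building .\n"
    "    ?building bag:oorspronkelijkBouwjaar ?bouwjaar ;\n"
    "              bag:status ?status ."
)
query = build_model_insert(":incident_model", ["status", "bouwjaar"], pattern)
print(query)
```

The same list of feature variables can then drive the query against the model, so that the argument order stays consistent between training and prediction.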

Practical use case

While I was working on this extension, the department I work for had a residential fire. The fire was in a rather complex multi-apartment building. These buildings are complex in how the corridors are laid out, and they have rather small floors and rooms.

I was curious whether there are more of this type of building in this city and where these buildings are situated. I created a model based on the distinct properties of this building and executed it, with the ‘fire’ building as a reference object to compare with.

The results were very interesting

I found similar buildings across the city. The same blueprints were probably used multiple times when these buildings were constructed several decades ago. Various neighborhoods have the exact same buildings.

Future work

We are planning to take the extension to the next step. A major step would be to address one of the limitations: we would like to remove the need for the user to create a model upfront and have the system create a model on the fly, based on the relevant input gathered during incident creation.

We would also like to find a way to restrict the similarity search by geometry, to improve relevance.

Although finding similar objects still needs some research, it will add extra intelligence on top of the knowledge that already exists somewhere in the organization. Knowledge based on similar objects enables better prevention and outreach, and it helps prevent responding units from running into the same issues others did.

Excited about the possibilities?

Are you as excited as we are about these possibilities? Do you see a use case in your organization? Let us know! You can find our contact details here.